OoD Prediction for Emotion Transfer

A transfer learning project that uses Task and Text Embeddings to predict how well a model trained on one emotion classification dataset performs on another (out-of-distribution, OoD, generalization).

More About the Project

Python | DeBERTaV3-base | Task Embeddings | Transfer Learning

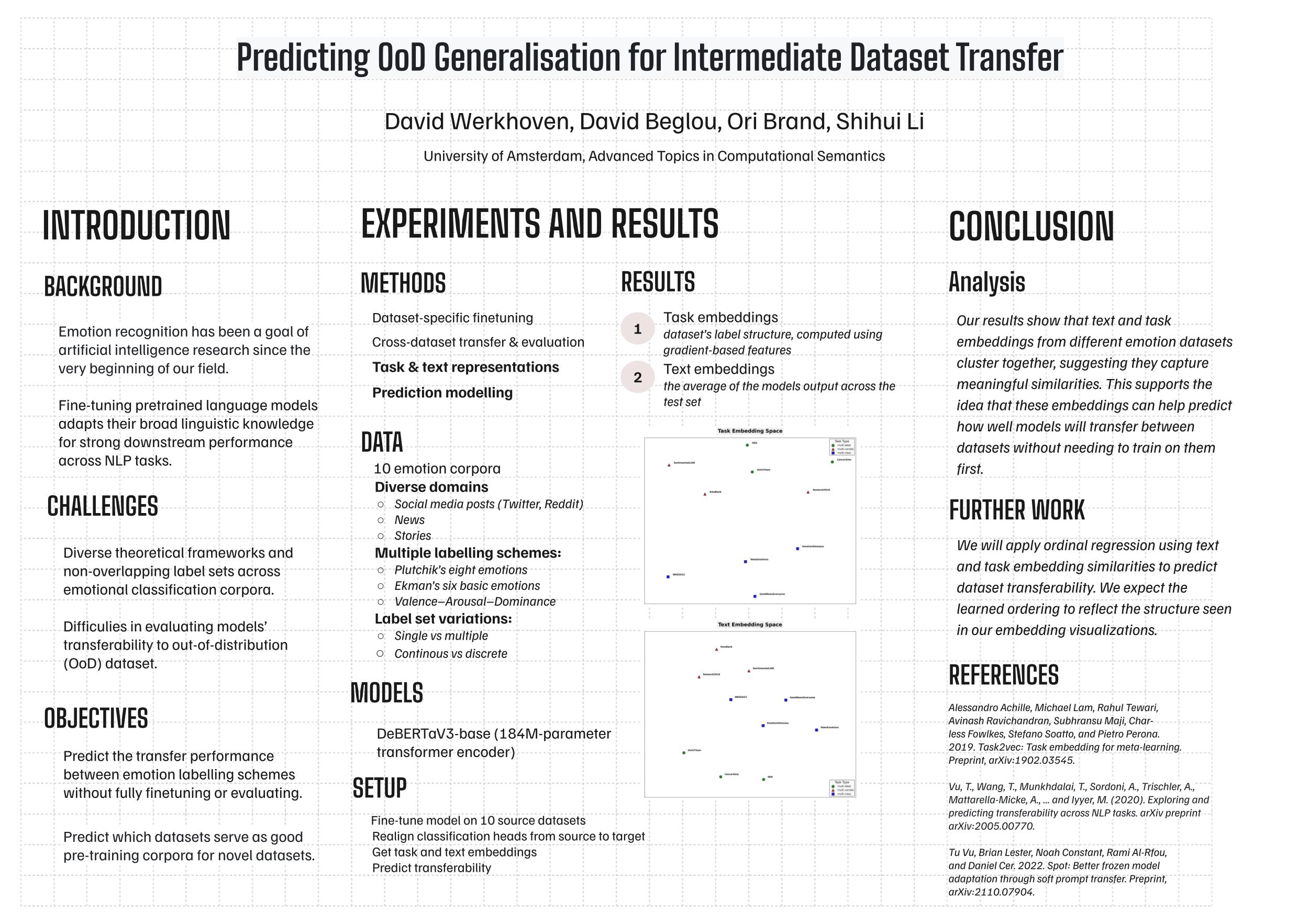

The core challenge is that emotion classifiers generalize poorly across datasets because labeling schemes vary. This project builds a regression model that predicts how effective intermediate transfer from one dataset to another will be. The predictor variables are similarities between datasets, quantified using class-conditional Task and Text Embeddings (the Task Embeddings are derived from gradient information). The predictions indicate which datasets serve as effective pre-training corpora.

Technical Report

My Contributions

Transfer Learning and Model Prediction

Designed a pipeline to predict transfer performance (OoD Generalization) between emotion datasets without requiring full model evaluation.

Fine-tuned a DeBERTaV3-base transformer encoder on each of 10 source emotion datasets in isolation to learn dataset-specific text representations.

Developed a regression model to predict transferability loss based on dataset similarity metrics.
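As a rough illustration of the regression step, the sketch below fits a one-feature ordinary least squares model that maps a dataset similarity score to a transfer loss. It is a minimal sketch under stated assumptions, not the project's model: the single-feature setup, the names `fit_simple_ols`, `predict`, `similarity`, and `transfer_loss`, and all numbers are hypothetical placeholders chosen so the arithmetic is easy to check.

```python
from statistics import mean


def fit_simple_ols(x, y):
    """Fit y ~ intercept + slope * x by ordinary least squares."""
    x_bar, y_bar = mean(x), mean(y)
    sxx = sum((xi - x_bar) ** 2 for xi in x)
    if sxx == 0:
        raise ValueError("x must contain at least two distinct values")
    sxy = sum((xi - x_bar) * (yi - y_bar) for xi, yi in zip(x, y))
    slope = sxy / sxx
    intercept = y_bar - slope * x_bar
    return intercept, slope


def predict(intercept, slope, x_new):
    """Predict the target for a new similarity value."""
    return intercept + slope * x_new


# Synthetic placeholder values, NOT results from the project.
# One entry per (source dataset, target dataset) pair.
similarity = [0.1, 0.3, 0.5, 0.7, 0.9]
transfer_loss = [0.6, 0.5, 0.4, 0.3, 0.2]

intercept, slope = fit_simple_ols(similarity, transfer_loss)

# Estimate the loss for an unseen pair from its similarity alone,
# without fine-tuning and evaluating a model on that pair.
estimated_loss = predict(intercept, slope, 0.6)
```

With several similarity metrics as predictors, the same idea extends to multiple linear regression or another regressor.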

Advanced Representation Learning

Constructed Task Embeddings from the squared gradient norm of the frozen encoder to capture dataset-specific structure.

Created class-conditional representations for each emotion in each dataset, using both Task and Text Embeddings to quantify dataset similarity.
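The two steps above can be sketched on a toy model. In the example below, a two-weight logistic regression stands in for the DeBERTaV3 encoder (an assumption made purely to keep the example self-contained), each class embedding is the mean of element-wise squared loss gradients over that class's examples, and class embeddings from two datasets are compared with cosine similarity. The function names, the choice of cosine similarity, and the data are all hypothetical.

```python
import math


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def squared_grad(weights, x, y):
    """Element-wise squared gradient of the logistic loss for one example."""
    p = sigmoid(sum(w * xi for w, xi in zip(weights, x)))
    # d(loss)/d(w_j) = (p - y) * x_j for logistic regression
    return [((p - y) * xi) ** 2 for xi in x]


def class_conditional_embeddings(weights, examples):
    """Average squared gradients separately over the examples of each class.

    examples: iterable of (features, label) pairs with label in {0, 1}.
    Returns a dict mapping each label to its embedding vector.
    """
    sums, counts = {}, {}
    for x, y in examples:
        g2 = squared_grad(weights, x, y)
        if y not in sums:
            sums[y] = [0.0] * len(g2)
            counts[y] = 0
        sums[y] = [s + g for s, g in zip(sums[y], g2)]
        counts[y] += 1
    return {y: [s / counts[y] for s in total] for y, total in sums.items()}


def cosine_similarity(a, b):
    dot = sum(ai * bi for ai, bi in zip(a, b))
    norm_a = math.sqrt(sum(ai * ai for ai in a))
    norm_b = math.sqrt(sum(bi * bi for bi in b))
    if norm_a == 0.0 or norm_b == 0.0:
        return 0.0
    return dot / (norm_a * norm_b)


# Toy data, NOT from the project: label 1 and label 0 stand for two emotions.
weights = [0.0, 0.0]  # frozen toy model
dataset_a = [([1.0, 0.0], 1), ([2.0, 0.0], 1), ([0.0, 1.0], 0)]
dataset_b = [([3.0, 0.0], 1), ([0.0, 2.0], 0)]

emb_a = class_conditional_embeddings(weights, dataset_a)
emb_b = class_conditional_embeddings(weights, dataset_b)

same_class = cosine_similarity(emb_a[1], emb_b[1])
cross_class = cosine_similarity(emb_a[1], emb_b[0])
```

In this toy setup the two classes excite disjoint weights, so matching classes come out as maximally similar and mismatched classes as orthogonal. With a real encoder the gradients would come from automatic differentiation over the frozen model's parameters, and the similarities would fall somewhere in between.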

Data Analysis and Visualization

Applied dimensionality reduction techniques to visualize the constructed Task Embeddings and discover structure within different emotion classification corpora.

Addressed challenges arising from the diverse theoretical frameworks and the varied, non-overlapping label sets of multiple emotion classification corpora.